Check out some of the recent projects from the Interactions Lab below, organised into four themes: Wearables; Tangible Interaction; Cognition and Embodiment; and Security and Social Computing. For a complete list of our research outcomes and interests, head over to the publications page.

You can also see some information about our lab and the equipment and facilities we have for realising projects in the areas of physical and tangible computing.

How can we select virtual objects or targets when interacting with smartglasses? This project describes two studies that empirically contrast six different selection mechanisms that can be combined with head-based pointing on a pair of smartglasses: a keypress on a handheld controller, a keypress on the HMD itself, dwell, voice, gesture and motion matching. Rather than pick winners, the paper seeks to tease apart the advantages and disadvantages of the different techniques. For example, handheld controllers are fast, dwell is accurate and motion matching decouples selection from targeting, which may be advantageous for distant targets on the periphery of the display.
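To make one of these mechanisms concrete, dwell selection can be sketched as a timer that fires once the head-based pointer has rested on the same target for long enough. This is a minimal, hypothetical sketch, not the code used in the studies: the function name, the input format and the 0.8 s threshold are all assumptions.

```python
from typing import Iterable, Optional, Tuple


def dwell_select(samples: Iterable[Tuple[float, Optional[str]]],
                 dwell_s: float = 0.8) -> Optional[str]:
    """Return the first target the pointer rests on for dwell_s seconds.

    samples: time-ordered (timestamp_in_seconds, target_under_pointer) pairs,
    where the target is None when the pointer is over empty space.
    The 0.8 s default is a hypothetical threshold, not a value from the paper.
    """
    current: Optional[str] = None
    start = 0.0
    for t, target in samples:
        if target != current:
            # Pointer moved to a different target (or off all targets): restart the timer
            current, start = target, t
        elif current is not None and t - start >= dwell_s:
            # Pointer stayed on the same target long enough: select it
            return current
    return None
```

Lengthening `dwell_s` reduces accidental selections (the so-called Midas touch problem) at the cost of slower input, which is one reason dwell tends to be accurate but not fast.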

Read more: Augusto Esteves, Yonghwan Shin and Ian Oakley (in press). "Comparing Selection Mechanisms for Gaze Input Techniques in Head-mounted Displays". International Journal of Human-Computer Studies. [download article]

-

How can we type on an HMD? Using a mix of empirical and computational methods, we designed, developed and evaluated novel keyboard layouts for typing via touch sensors mounted on the fingernails. We also considered mobility, as typing while on the go is a critical smart device use case. Our results show that nail typing works well when you are walking, that computationally optimized keyboard layouts provide benefits (10%-40%) and that people may be able to achieve text entry rates as high as 31 WPM, close to the speeds attained when typing on a mobile phone. In the future, we may ditch the keyboard and simply type on our fingers.

Read more: DoYoung Lee, Jiwan Kim, and Ian Oakley. 2021. FingerText: Exploring and Optimizing Performance for Wearable, Mobile and One-Handed Typing. In Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems (CHI '21). Association for Computing Machinery, New York, NY, USA, Article 283, 1-15. [download article]

See more: Watch the teaser video on YouTube

-

Can we use our fingernails as a touch surface to support HMD use? We developed a set of five touch-sensitive fingernails and present a series of studies and evaluations of their potential to support interactive experiences: a design workshop to brainstorm interaction ideas; a qualitative study that captures the comfort of a wide range of touches (144 in total); and two studies that examine the speed and stability with which users can make touches to their nails. We conclude with recommendations for a final set of 29 viable touches that are comfortable and can be performed accurately and rapidly.

Read more: DoYoung Lee, SooHwan Lee and Ian Oakley. "Nailz: Sensing Hand Input with Touch Sensitive Nails". In Proceedings of CHI'20, April 25-30, 2020, Honolulu, HI, USA. [download article]

Know more: Watch the teaser on YouTube or the coverage on the Arduino blog

-

Can we use touches to the face to enhance input on smartglasses? This project explores how to design input that leverages facial touches while remaining comfortable and socially acceptable for users to perform in public spaces such as coffee shops. Building on an initial study in which users proposed input primitives, we develop five design strategies (miniaturizing, obfuscating, screening, camouflaging and repurposing), construct two functional hand-to-face input prototypes and validate the designs by having participants perform input tasks in public.

Read more: DoYoung Lee, Youryang Lee, Yonghwan Shin, Ian Oakley (2018) "Designing Socially Acceptable Hand-to-Face Input" In Proceedings of ACM UIST'18, Berlin, Germany. [download article]

See more: Watch the project video on YouTube or the UIST'18 presentation

-

How can we create haptic feedback for the head? Whiskers explores the use of state-of-the-art in-air ultrasonic haptic cues delivered to the face to provide tactile notifications and updates while you wear a pair of smartglasses. The work describes lab studies using a fixed prototype that establish baseline perceptual performance in tasks like localisation, movement and duration perception across three different face sites suitable for use with glasses: the cheek, the brow and just above the bridge of the nose.

Read more: Hyunjae Gil, Hyungki Son, Jin Ryong Kim, Ian Oakley (2018) "Whiskers: Exploring the Use of Ultrasonic Haptic Cues on the Face" In Proceedings of ACM CHI'18, Montreal, Canada. [download article]

-

How can you select UI buttons when wearing a Head Mounted Display (HMD)? SmoothMoves proposes target selection via smooth pursuits head movements. Targets move along systematic, predictable paths, and SmoothMoves works by computing correlations between those paths and the motions of the user's head as the user visually follows a target. When the link between head movements and the motion of a specific target becomes sufficiently strong, that target is selected. SmoothMoves frees up the hands while supporting rapid, reliable and comfortable interaction.
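The correlation step described above can be sketched with a Pearson coefficient computed per axis over a short window of samples. This is a minimal, hypothetical sketch rather than the SmoothMoves implementation: the function names, the 0.8 threshold and the rule of scoring each target by the weaker of its x and y correlations are assumptions.

```python
import math
from typing import Dict, Optional, Sequence, Tuple


def pearson(a: Sequence[float], b: Sequence[float]) -> float:
    """Pearson correlation of two equal-length signals (0.0 if either is constant)."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0
    return cov / math.sqrt(var_a * var_b)


def select_target(head_x: Sequence[float], head_y: Sequence[float],
                  targets: Dict[str, Tuple[Sequence[float], Sequence[float]]],
                  threshold: float = 0.8) -> Optional[str]:
    """Return the target whose path best matches the head motion, or None.

    Each target's score is the weaker of its x- and y-axis correlations, so the
    head must follow the target on both axes (hypothetical rule). Only scores at
    or above the (hypothetical) threshold can trigger a selection.
    """
    best, best_score = None, threshold
    for name, (tx, ty) in targets.items():
        score = min(pearson(head_x, tx), pearson(head_y, ty))
        if score >= best_score:
            best, best_score = name, score
    return best
```

Because correlation ignores scale and offset, a small head movement that tracks a target's path can still match it strongly, which is what lets targets be selected without precisely pointing at them.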

Read more: Augusto Esteves, David Verweij, Liza Suraiya, Rasel Islam, Youryang Lee, Ian Oakley (2017) "SmoothMoves: Smooth Pursuits Head Movements for Augmented Reality." In Proceedings of ACM UIST'17, Quebec City, Canada. [download article]

See more: Watch the project video on YouTube or the UIST'17 presentation

-

How can you type a password on a pair of glasses? Wearable devices store more and more sensitive and personal information, making securing access to them a priority. But their limited input spaces make them a poor fit for traditional PIN or password input methods. GlassPass is a novel authentication system for smart glasses that uses spatiotemporal patterns of tapping input to compose a password. Studies indicate that this design is well matched to the glasses form factor, that password entry is quick and that passwords are easy to remember.
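One way to picture a spatiotemporal tapping password is as two parts: the sequence of places tapped and the rhythm of the gaps between taps. The sketch below is hypothetical and is not how GlassPass encodes or verifies passwords; the region names, the short/long gap split and the 0.3 s boundary are assumptions made for illustration.

```python
from typing import List, Tuple

Tap = Tuple[str, float]  # (touch region on the glasses frame, timestamp in seconds)


def encode(taps: List[Tap], long_gap_s: float = 0.3) -> Tuple[Tuple[str, ...], Tuple[str, ...]]:
    """Split a tap sequence into its spatial part and its temporal (rhythm) part.

    Gaps of at least long_gap_s seconds (a hypothetical boundary) count as long
    ("L"); shorter gaps count as short ("S").
    """
    regions = tuple(region for region, _ in taps)
    rhythm = tuple("L" if t2 - t1 >= long_gap_s else "S"
                   for (_, t1), (_, t2) in zip(taps, taps[1:]))
    return regions, rhythm


def matches(attempt: List[Tap], enrolled: List[Tap], long_gap_s: float = 0.3) -> bool:
    """An attempt unlocks only if both where and when the taps happen match."""
    return encode(attempt, long_gap_s) == encode(enrolled, long_gap_s)
```

Quantizing gaps into coarse classes, rather than comparing raw timestamps, is what lets the same password be re-entered at a slightly different tempo.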

Read more: MD. Rasel Islam, Doyoung Lee, Liza Suraiya Jahan, and Ian Oakley. (2018) "GlassPass: Tapping Gestures to Unlock Smart Glasses." In Proceedings of Augmented Human 2018, Seoul, Korea. [download article]

-

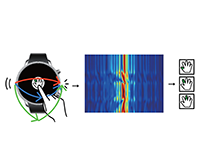

What can we achieve by sensing finger movements above smartwatches? Interactions on watches are challenging due to their small screens. This project explores the use of active sonar signals to identify which finger (thumb, index or middle) is touching a smartwatch screen, enabling richer interactions such as using a touch by the middle finger or thumb as a "right-click". We show that sonar responses can be used to classify the touching finger on a smartwatch with an accuracy of up to 93.7%.

Read more: Jiwan Kim and Ian Oakley. 2022. "SonarID: Using Sonar to Identify Fingers on a Smartwatch." In CHI Conference on Human Factors in Computing Systems (CHI '22), April 29-May 5, 2022, New Orleans, LA, USA. ACM, New York, NY, USA, 10 pages. [download article]

See more: Watch the teaser video on YouTube

-

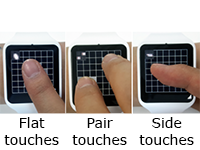

How comfortable is touch input on smartwatches? Because smartwatch screens are small, many researchers have tried to enhance input on them through advanced forms of touch, such as making multiple simultaneous inputs, identifying the finger making an input or determining the angle of a touching finger. We argue that comfort is a key factor contributing to the viability of these input techniques and capture the first data on this issue for smartwatches. We show that, depending on which finger (or finger part) is used, different types of touch are more or less comfortable. We derive a set of design recommendations and interface prototypes for comfortable, effective smartwatch input.

Read more: Hyunjae Gil, Hongmin Kim, and Ian Oakley. 2018. Fingers and Angles: Exploring the Comfort of Touch Input on Smartwatches. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2, 4, Article 164 (December 2018), 21 pages. https://doi.org/10.1145/3287042 [download article]

-

How can we securely access smartwatches? It's hard to type a password on such a small touchscreen. Even the 10 buttons needed to enter PINs are tiny when displayed on a watch. This project explores performance in a PIN entry task on a watch and proposes PIC, an alternative design based on large buttons and chorded input using one or two fingers. We characterize performance, showing that although PIC can take a little longer to use than PIN, users make few errors and may also create more secure, less guessable smartwatch passwords.
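Chording is what allows a few large buttons to stand in for many small ones: every single button and every two-button combination can act as a distinct symbol. The arithmetic below is a hypothetical illustration of that trade-off and does not describe PIC's actual layout; the function names and the button counts used in the checks are assumptions.

```python
from itertools import combinations
from typing import List, Tuple


def chords(n_buttons: int) -> List[Tuple[int, ...]]:
    """All one- and two-finger chords on n_buttons large buttons."""
    buttons = range(n_buttons)
    singles = [(b,) for b in buttons]          # n one-finger presses
    pairs = list(combinations(buttons, 2))     # n*(n-1)/2 two-finger presses
    return singles + pairs


def password_space(symbols: int, length: int) -> int:
    """Number of distinct passwords of a given length over an alphabet of symbols."""
    return symbols ** length
```

With four buttons, for example, there are 4 + 6 = 10 chords, the same alphabet size as a 10-digit PIN keypad, so a four-chord password has the same 10,000 possible values as a four-digit PIN while each button can be much larger. Note that the security result reported above concerns how guessable the passwords users actually choose are, which this count of possible passwords does not capture.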

Read more: Ian Oakley, Jun Ho Huh, Junsung Cho, Geumhwan Cho, Rasel Islam and Hyoungshick Kim (2018). "The Personal Identification Chord". In ASIACCS'18, Songdo, Incheon, Korea. [download article]

Know more: Watch the presentation at the Samsung Security Tech Forum 2020 or check out the article at Money Today (Korean language)

-

How can we identify the finger touching a smartwatch? This project looks at whether the touch contact regions generated by different fingers during interaction with a smartwatch are distinct from one another. Using raw data from the touch screen driver of a modified Android kernel, we build machine learning models to distinguish fingers and show how these can be used to create interfaces where different functions are assigned to different digits.
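As a toy illustration of distinguishing fingers by their contact regions, the sketch below uses a nearest-centroid classifier over a couple of contact-shape features. It is hypothetical and deliberately simpler than the machine learning models in the paper; the feature choice (contact length and width), the function names and the numbers in the checks are all made up for illustration.

```python
import math
from typing import Dict, List, Sequence, Tuple


def train_centroids(samples: Dict[str, List[Sequence[float]]]) -> Dict[str, Tuple[float, ...]]:
    """Average the feature vectors recorded for each finger.

    samples maps a finger label to a list of feature vectors, e.g. hypothetical
    (contact length, contact width) measurements taken from the touch driver.
    """
    return {finger: tuple(sum(col) / len(col) for col in zip(*rows))
            for finger, rows in samples.items()}


def identify_finger(features: Sequence[float], centroids: Dict[str, Tuple[float, ...]]) -> str:
    """Return the finger whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda finger: math.dist(features, centroids[finger]))
```

In practice the features would need per-user calibration and a stronger classifier; the point here is only that distinct contact shapes can map touches to digits, which in turn lets an interface assign different functions to different fingers.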

Read more: Gil, H.J., Lee, D.Y., Im, S.G. and Oakley, I. (2017) "TriTap: Identifying Finger Touches on Smartwatches." In Proceedings of ACM CHI'17, Denver, CO, USA. [download article]

Know more: Watch the teaser video from CHI 2017, or download source and binaries on GitHub

-

Can we use the shape of our finger touch to control smart watches? We developed a watch-format touch sensor capable of capturing the contact region of a finger touch and used this platform to explore the kinds of contact area shapes that users can make. We also investigated the design space of this technique and proposed a series of interaction techniques suitable for contact area input on watches.

Read more: Oakley, I., Lindahl, C., Le, K., Lee, D.Y. and Islam, R.M.D. "The Flat Finger: Exploring Area Touches on Smartwatches". In Proceedings of ACM CHI'16, San Jose, CA, USA. [download article]

See more: Watch the teaser video from CHI 2016

-

How can we quickly and easily control smart watches? This work explores how rapid patterns of two-finger taps can be used to issue commands on a smart watch. The goal of this work is to design interfaces that give access to a wide range of functionality without requiring users to navigate through menus or multiple screens of information.
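A tapping pattern of this kind can be treated as a short sequence of "beats", where each beat is a tap by one finger or by both together, and the whole sequence acts as a shortcut to a command. The sketch below is a hypothetical illustration, not the recognizer from the paper: the finger labels, the 50 ms window for treating two taps as simultaneous and the command table are assumptions.

```python
from typing import Dict, List, Optional, Tuple

Event = Tuple[str, float]  # (finger "L" or "R", touch-down time in seconds)

# Hypothetical mapping from beat patterns to commands
COMMANDS: Dict[Tuple[str, ...], str] = {
    ("LR",): "play/pause",
    ("LR", "L", "R"): "open music app",
    ("R", "R"): "dismiss notification",
}


def to_beats(events: List[Event], simultaneous_s: float = 0.05) -> Tuple[str, ...]:
    """Group time-ordered touch-downs into beats: "L", "R" or "LR" (both fingers)."""
    beats: List[str] = []
    i = 0
    while i < len(events):
        finger, t = events[i]
        nxt = events[i + 1] if i + 1 < len(events) else None
        if nxt is not None and nxt[0] != finger and nxt[1] - t <= simultaneous_s:
            beats.append("LR")  # both fingers landed almost together
            i += 2
        else:
            beats.append(finger)
            i += 1
    return tuple(beats)


def lookup(events: List[Event], commands: Dict[Tuple[str, ...], str] = COMMANDS) -> Optional[str]:
    """Return the command bound to the tapped pattern, or None if it is unbound."""
    return commands.get(to_beats(events))
```

Because each pattern maps directly to a command, no menu navigation is needed; the cost is that users must remember which pattern triggers which function.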

Read more: Oakley, I., Lee, D.Y., Islam, R.M.D. and Esteves, A. "Beats: Tapping Gestures for Smart Watches". In Proceedings of ACM CHI'15, Seoul, Republic of Korea. [download article]

See more: Watch the teaser video from CHI 2015

-

How can we interact with very small mobile or wearable computers? With next generation personal computing devices promising more power in smaller packages (such as smart watches or jewellery) interaction techniques need to scale down. This work explores interaction via an array of touch sensors positioned all around the edge of a small device with a front-mounted screen. This arrangement sidesteps the "fat-finger" problem, in which a user's digits obscure content and hamper interaction.

Read more: Oakley, I. and Lee, D.Y. (2014) "Interaction on the Edge: Offset Sensing for Small Devices". In proceedings of ACM CHI 2014, Toronto, Canada. [download article]

See more: Watch the teaser video from CHI 2014

How can we make reading on electronic devices better? We propose a tangible reading aid in the form of the eTab, a smart bookmark designed to scaffold and support advanced active reading activities such as navigation, cross-referencing and note taking. The paper describes the design, implementation and evaluation of the eTab prototype on a standard Android tablet computer.

Read more: Bianchi, A., Ban, S.R. and Oakley, I. "Designing a Physical Aid to Support Active Reading on Tablets". In Proceedings of ACM CHI'15, Seoul, Republic of Korea. [download article]

See more: Watch the teaser video from CHI 2015

-

How can we sense multiple objects on and around current mobile devices? While prior work has shown that embedding a magnet in one object allows it to be tracked, scaling this up to multiple objects has proven challenging. This paper proposes a solution - spinning magnets. By looking for the systematic variations in magnetic field strength this causes, we are able to infer the location of each of a set of tokens.
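One way to read "systematic variations" is that a magnet spinning at a steady rate makes the field measured by the device oscillate at that rate, so tokens spinning at different rates can be told apart in the frequency domain. The sketch below is a hypothetical illustration of that idea using a single-bin discrete Fourier transform on one magnetometer axis; it is not the MagnID tracking algorithm, and the token names, spin rates and sample rate are assumptions.

```python
import math
from typing import Dict, Sequence


def amplitude_at(signal: Sequence[float], freq_hz: float, rate_hz: float) -> float:
    """Amplitude of the freq_hz component of signal, via a single-bin DFT."""
    n = len(signal)
    mean = sum(signal) / n  # remove the static part of the field (e.g. the ambient field)
    re = im = 0.0
    for i, x in enumerate(signal):
        phase = 2 * math.pi * freq_hz * i / rate_hz
        re += (x - mean) * math.cos(phase)
        im -= (x - mean) * math.sin(phase)
    return 2.0 * math.hypot(re, im) / n


def token_strengths(signal: Sequence[float], spin_hz: Dict[str, float],
                    rate_hz: float) -> Dict[str, float]:
    """Per-token oscillation strength, assuming each token spins at its own known rate."""
    return {token: amplitude_at(signal, f, rate_hz) for token, f in spin_hz.items()}
```

Since a magnet's field weakens with distance, a larger amplitude would hint that a token is closer; turning such amplitudes into full positions requires more sensing and modelling than this sketch shows.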

Read more: Bianchi, A. and Oakley, I. "MagnID: Tracking Multiple Magnetic Tokens". In Proceedings of ACM TEI'15, Stanford, CA, USA. [download article]

Know more: Watch the video from TEI 2015, check out the Hackaday article or the source on GitHub

-

How can physical, tangible interfaces be introduced to everyday computers? This work explores how mobile devices can be used as platforms for tangible interaction through the design and construction of eight magnetic appcessories. These are cheap, robust physical interfaces that leverage magnets (and the magnetic sensing built into mobile devices) to support reliable and expressive tangible interactions with digital content.

Read more: Bianchi, A and Oakley I. (2013) "Designing Tangible Magnetic Appcessories". In proceedings of ACM TEI 2013, Barcelona, Spain. [download article]

Know more: Watch the video on YouTube or check out the articles on Gizmodo, Slashdot and Make Magazine

Physical, tangible interfaces are compelling, but what makes them better than conventional graphical systems? One answer might be that they facilitate epistemic action - the manipulation of external props as tools to simplify internal thought processes. To explore this idea, we developed the ATB framework, a video coding instrument for fine-grained assessment of epistemic activity. We present an initial user study that suggests it is reliable and that the number and type of epistemic actions a user performs meaningfully relates to other aspects of their task performance such as speed and ultimate success.

Read more: Esteves, A., Bakker, S., Antle, A., May, A., Warren, J. and Oakley, I. "The ATB Framework: Quantifying and Classifying Epistemic Strategies in Tangible Problem-Solving Tasks". In Proceedings of ACM TEI'15, Stanford, CA, USA. [download article]

-

Our minds don't work in isolation, but operate embedded and embodied in our bodies and the world. To understand the importance of this assertion, we are exploring how physical, tangible interfaces and representations - things that users can reach out and hold - impact user performance in problem-solving tasks such as puzzles and games. We seek to elaborate the ways in which the representation of a problem affects how our minds can conceive and deal with it.

Read more: Esteves, A., Hoven, E. van den and Oakley I. (2013) Physical Games or Digital Games? Comparing Support for Mental Projection in Tangible and Virtual Representations of a Problem Solving Task. In proceedings of ACM TEI 2013, Barcelona, Spain. [download article]

How do you feel online? Emotions are a defining aspect of the social media experience. A growing body of research suggests that basic digital interactions like clicking and typing can serve as digital biomarkers for affective states and, in turn, support applications such as diagnosis, therapy or simply improving self-awareness of affective wellbeing. We explore these ideas in the context of social media use in two studies that capture touch, motion and eye-gaze data on a mobile phone while participants watch media clips and browse Facebook feeds. We predict self-reported binary affect, valence and arousal with accuracy levels as high as 94%, suggesting that, in the future, social media services could be designed to detect and respond to the affective state of their users.

Read more: Mintra Ruensuk, Eunyong Cheon, Hwajung Hong, and Ian Oakley. "How Do You Feel Online: Exploiting Smartphone Sensors to Detect Transitory Emotions during Social Media Use." Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 4, 4, Article 150 (December 2020), 32 pages. [download article]

-

How secure is your phone? It may be easier to unlock than you think. Up to one third of Android pattern locks can be guessed in less than 20 attempts. To improve the security of phone lock systems, we studied the security of free-form gestures as a password modality, ultimately creating policies, such as a blacklist of banned gestures and a meter, that reduce guessability to as low as 10%.
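A gesture blacklist needs some notion of when a new gesture is "too close" to a banned one. The sketch below is a hypothetical version of such a check, not the policy used in the study: it assumes both gestures have already been resampled to the same number of points, normalizes away position and size, and rejects candidates whose mean point-to-point distance to any banned gesture falls below an assumed 0.1 threshold.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def normalize(gesture: Sequence[Point]) -> List[Point]:
    """Translate a gesture to its centroid and scale it by its larger bounding-box side."""
    cx = sum(x for x, _ in gesture) / len(gesture)
    cy = sum(y for _, y in gesture) / len(gesture)
    xs = [x for x, _ in gesture]
    ys = [y for _, y in gesture]
    size = max(max(xs) - min(xs), max(ys) - min(ys)) or 1.0
    return [((x - cx) / size, (y - cy) / size) for x, y in gesture]


def distance(a: Sequence[Point], b: Sequence[Point]) -> float:
    """Mean distance between corresponding points of two equal-length gestures."""
    na, nb = normalize(a), normalize(b)
    return sum(math.dist(p, q) for p, q in zip(na, nb)) / len(na)


def is_allowed(candidate: Sequence[Point], blacklist: Sequence[Sequence[Point]],
               min_distance: float = 0.1) -> bool:
    """Accept the candidate only if it is not close to any blacklisted gesture."""
    return all(distance(candidate, banned) >= min_distance for banned in blacklist)
```

Normalizing first means that simply drawing a banned shape larger or in a different spot on the screen does not get it past the blacklist.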

Read more: Eunyong Cheon, Yonghwan Shin, Jun Ho Huh, Hyoungshick Kim and Ian Oakley. "Gesture Authentication for Smartphones: Evaluation of Gesture Password Selection Policies". In Proceedings of IEEE S&P 2020 [download article]

Read more: Eunyong Cheon, Jun Ho Huh, and Ian Oakley. "GestureMeter: Design and Evaluation of a Gesture Password Strength Meter". In Proceedings of CHI'23 [download article]

Know more: Watch the Samsung Security Tech Forum 2020 talk or the article in Money Today (in Korean)

-

What does your Facebook profile really say about you? This work connected Uses and Gratifications (U&G) theory, a framework that aims to explain the how and why of media consumption, with data captured from Facebook. Specifically, motives captured via a systematic survey were linked to data summarising both an individual's friendship network and detailed site usage statistics. This work both expands the scope of U&G theory and highlights just how much the data Facebook stores can reveal about you.

Read more: Spiliotopoulos, T. and Oakley, I. (2013) "Understanding motivations for Facebook use: Usage metrics, network structure, and privacy". In proceedings of ACM CHI 2013, Paris, France. [download article]

-

Technology is changing musical consumption, production and performance in unprecedented ways. In particular, social networking sites (SNS) are a 'disruptive force of change', catalysing the consumption, production and dissemination of music on the one hand and shaping the forms of sociality that music enables or disables on the other. This project seeks a deeper understanding of the impact of social technologies on musical practices, which can inform the design of novel paradigms of social interaction online and offline.

Read more: Karnik, M., Oakley, I., Venkatanathan, J., Spiliotopoulos, T. and Nisi, V. (2013) "Uses & Gratifications of a Facebook Media Sharing Group". In proceedings of ACM CSCW 2013, San Antonio, Texas. [download article]